In the ever-evolving landscape of web data extraction, you may find yourself wondering what advances lie ahead. As the technology matures, data retrieval is likely to become faster and more accurate. Imagine AI-driven tools that crawl the web in near real time and adapt to page structures they have never seen before. Businesses are eager to feed these insights into strategic decisions, but this data-driven future also raises ethical, legal, and technical challenges that deserve as much attention as the technology itself.

AI and Machine Learning in Data Extraction

The integration of AI and machine learning stands out as one of the most important advances in web data extraction. These technologies address long-standing weaknesses of traditional, rule-based methods: scalability, accuracy, and efficiency. Instead of depending on hand-written rules for every site, AI models can learn from examples and adapt to new data structures and sources.

The future applications of AI and machine learning in data extraction are broad and promising. Because they can process massive datasets in real time, these technologies let organizations extract valuable insights from web sources efficiently. They can also improve data quality by automating extraction and reducing the errors common in manual methods. Furthermore, AI-driven extraction opens up possibilities for predictive analytics, trend forecasting, and personalized user experiences based on the extracted data.
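To make "learning to adapt to data structures" concrete, here is a toy sketch, not how any particular AI extraction product works: a multinomial naive Bayes classifier, written with only the standard library, that learns to label scraped strings as a price, date, or title from a handful of examples. The "shape" features, the labels, and the names `shape_tokens` and `FieldClassifier` are illustrative assumptions; real systems use far richer features and much larger training sets.

```python
import math
import re
from collections import Counter, defaultdict


def shape_tokens(text: str) -> list:
    """Map a scraped string to coarse "shape" tokens.

    Letters become 'a', digits become 'D', and repeated characters collapse,
    so '$19.99' and '$5.00' both reduce to the shape '$D.D'.
    """
    shape = re.sub(r"[A-Za-z]", "a", text)   # letters first, so 'D' is not remapped
    shape = re.sub(r"[0-9]", "D", shape)
    shape = re.sub(r"(.)\1+", r"\1", shape)  # collapse runs like 'DDD' -> 'D'
    return list(shape) + [f"shape:{shape}"]


class FieldClassifier:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.label_counts = Counter()
        self.token_counts = defaultdict(Counter)
        self.vocab = set()

    def fit(self, examples):
        for text, label in examples:
            tokens = shape_tokens(text)
            self.label_counts[label] += 1
            self.token_counts[label].update(tokens)
            self.vocab.update(tokens)
        return self

    def predict(self, text: str) -> str:
        tokens = shape_tokens(text)
        total = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.label_counts.items():
            # Log prior plus the smoothed log likelihood of each token.
            score = math.log(count / total)
            denom = sum(self.token_counts[label].values()) + len(self.vocab)
            for tok in tokens:
                score += math.log((self.token_counts[label][tok] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


training = [
    ("$19.99", "price"), ("$5.00", "price"), ("€120.50", "price"),
    ("2024-03-01", "date"), ("1999-12-31", "date"),
    ("Wireless Mouse", "title"), ("USB-C Charging Cable", "title"),
]
clf = FieldClassifier().fit(training)
print(clf.predict("$42.10"))               # price
print(clf.predict("2023-07-15"))           # date
print(clf.predict("Mechanical Keyboard"))  # title
```

The point of the design is that no site-specific selector appears anywhere: adding a new field type means adding labeled examples, not writing new rules.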

Real-time Data Extraction Technologies

Real-time data extraction technologies are changing how data is retrieved and used. Instant data delivery gives you access to information as it changes, which improves decision-making. Automated scraping tools streamline the extraction process, saving time and increasing the efficiency of web data extraction tasks.

Instant Data Delivery

To deliver data seamlessly and in real time, organizations are increasingly adopting technologies built for instant extraction. This helps them keep pace with fast-moving, data-driven decision-making. Here are three key aspects of instant data delivery:

- Real-time Data Streaming Capabilities: Instant data delivery relies on data streaming capabilities that enable a continuous, rapid flow of information from the source to the end user, ensuring up-to-the-minute insights.

- Instant Data Integration: Instant data delivery involves the immediate integration of extracted data into existing systems or analytics platforms. This integration ensures that fresh data is available for analysis and decision-making without delays.

- Automated Alerts and Notifications: Instant data delivery technologies often include features that trigger automated alerts and notifications based on predefined criteria, allowing organizations to act swiftly on critical information.
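Alerts "based on predefined criteria" can be sketched as rules evaluated against each record as it streams in. The example below is a minimal illustration under assumed names (`Record`, `Rule`, `price_drop_rule`, `alerts` are all hypothetical); a plain list stands in for what would, in practice, be a message queue or streaming consumer.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, Iterator, List, Tuple


@dataclass
class Record:
    sku: str
    price: float


@dataclass
class Rule:
    name: str
    predicate: Callable[[Record], bool]


def price_drop_rule(pct: float) -> Rule:
    """Fire when a SKU's price falls by at least `pct` versus its last seen value."""
    last_seen: Dict[str, float] = {}

    def check(record: Record) -> bool:
        previous = last_seen.get(record.sku)
        last_seen[record.sku] = record.price
        return previous is not None and record.price <= previous * (1 - pct)

    return Rule(f"drop>={pct:.0%}", check)


def alerts(stream: Iterable[Record], rules: List[Rule]) -> Iterator[Tuple[str, Record]]:
    """Check each record against every rule as it arrives; yield matches immediately."""
    for record in stream:
        for rule in rules:
            if rule.predicate(record):
                yield rule.name, record


feed = [Record("A1", 100.0), Record("A1", 95.0), Record("A1", 80.0), Record("B2", 15.0)]
rules = [price_drop_rule(0.10), Rule("below_20", lambda r: r.price < 20)]
for name, record in alerts(feed, rules):
    print(name, record)
# drop>=10% Record(sku='A1', price=80.0)
# below_20 Record(sku='B2', price=15.0)
```

Because `alerts` is a generator, each match is emitted as soon as its record is processed rather than after the whole batch finishes.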

Embracing instant data delivery empowers organizations to make informed decisions in real-time, enhancing agility and competitiveness in today’s data-driven landscape.

Automated Scraping Tools

Automated scraping tools are central to real-time data extraction. Automation has changed how businesses gather and analyze information, enabling faster decisions. However, these tools must be used responsibly: respecting data privacy, site terms, and established crawling conventions such as robots.txt is essential.

Scalability is crucial for handling large volumes of data efficiently. As businesses rely more on real-time insights for competitive advantage, demand for scalable scraping solutions will keep rising. Future tools are likely to be more sophisticated, adapting to dynamic web environments and delivering reliable data streams.

To navigate this evolving landscape, organizations must put ethical data practices first while taking advantage of the scalability and growing sophistication of automated scraping tools. By doing so, businesses can use real-time data extraction responsibly and effectively.
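One concrete, automatable piece of responsible scraping is honoring a site's robots.txt. Python's standard `urllib.robotparser.RobotFileParser` can parse the file and answer `can_fetch` and `crawl_delay` queries. The sketch below assumes the robots.txt text has already been downloaded; the bot name, sample rules, and `plan_requests` helper are hypothetical. Robots.txt is only one part of ethical practice; privacy law and site terms still apply.

```python
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-research-bot"  # hypothetical crawler name

SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def load_policy(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been fetched."""
    policy = RobotFileParser()
    policy.parse(robots_txt.splitlines())
    return policy


def plan_requests(policy: RobotFileParser, urls, default_delay: float = 1.0):
    """Keep only URLs the policy allows, paired with the delay to wait before each."""
    delay = policy.crawl_delay(USER_AGENT) or default_delay
    return [(url, delay) for url in urls if policy.can_fetch(USER_AGENT, url)]


policy = load_policy(SAMPLE_ROBOTS_TXT)
print(plan_requests(policy, [
    "https://example.com/products/1",
    "https://example.com/private/report",
]))
# [('https://example.com/products/1', 2)]
```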

Web Scraping Efficiency

Efficiency in web scraping is a critical component of real-time data extraction technologies, enabling businesses to swiftly gather valuable insights from online sources. To enhance web scraping efficiency, consider the following:

- Scalability Challenges: As data volumes grow, scraping processes must scale without slowing down. Strategies such as distributed scraping or cloud-based infrastructure can help overcome these challenges.

- Performance Optimization: Optimizing the performance of web scraping tools through techniques like caching, asynchronous requests, and intelligent data parsing can significantly enhance efficiency. By fine-tuning these aspects, you can reduce latency and improve overall extraction speeds.

- Resource Management: Efficiently managing resources like bandwidth, memory, and processing power is essential for maximizing web scraping efficiency. Monitoring and optimizing resource usage can prevent bottlenecks and ensure smooth data extraction processes.

By focusing on scalability, performance optimization, and resource management, businesses can streamline their web scraping operations for real-time data extraction.
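Two of the techniques above, asynchronous requests and caching, combine naturally with a concurrency cap that protects both your bandwidth and the target site. Here is a minimal `asyncio` sketch under stated assumptions: `fake_fetch` simulates network latency and stands in for a real async HTTP call, and `CachedFetcher` is a hypothetical name. Repeated URLs share a single in-flight task, so each page is downloaded once.

```python
import asyncio
from typing import Awaitable, Callable, Dict


class CachedFetcher:
    """Bounded-concurrency fetcher that deduplicates and caches requests."""

    def __init__(self, fetch_fn: Callable[[str], Awaitable[str]], max_concurrency: int = 5):
        self._fetch_fn = fetch_fn
        self._semaphore = asyncio.Semaphore(max_concurrency)  # caps in-flight requests
        self._tasks: Dict[str, asyncio.Task] = {}  # url -> shared task (acts as the cache)
        self.network_calls = 0

    async def get(self, url: str) -> str:
        # Reuse the same task for repeated URLs, even while it is still running.
        if url not in self._tasks:
            self._tasks[url] = asyncio.create_task(self._fetch(url))
        return await self._tasks[url]

    async def _fetch(self, url: str) -> str:
        async with self._semaphore:
            self.network_calls += 1
            return await self._fetch_fn(url)


async def fake_fetch(url: str) -> str:
    await asyncio.sleep(0.01)  # simulated network latency
    return f"<html>{url}</html>"


async def main():
    # Create the fetcher inside the running event loop.
    fetcher = CachedFetcher(fake_fetch, max_concurrency=3)
    urls = [f"https://example.com/p/{i % 4}" for i in range(10)]  # only 4 unique URLs
    pages = await asyncio.gather(*(fetcher.get(u) for u in urls))
    return pages, fetcher.network_calls


pages, calls = asyncio.run(main())
print(len(pages), calls)  # 10 4
```

The semaphore limits how many downloads run at once, while `asyncio.gather` keeps results in the same order as the input URLs.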

Big Data Analytics for Web Data

To harness the potential of web data, you must employ advanced data analysis techniques coupled with powerful web scraping tools. By utilizing these technologies, you can extract valuable insights from vast amounts of web data, enabling informed decision-making and predictive analytics. The synergy between data analysis techniques and web scraping tools forms the foundation for effective big data analytics in the realm of web data extraction.

Data Analysis Techniques

Data analysis techniques play a crucial role in harnessing the vast amount of web data available today. Three methodologies are especially useful for making sense of it:

- Predictive Modeling: Using historical data to predict future trends and outcomes is a powerful technique. By applying algorithms to patterns within the data, predictive models can forecast likely developments; their accuracy depends on data quality and on whether past patterns continue to hold.

- Data Visualization: Visual representations of data through graphs, charts, and dashboards aid in understanding complex datasets. Visualization tools enable you to uncover insights, trends, and correlations that might not be apparent from raw data alone. This visual storytelling simplifies decision-making processes and enhances data-driven strategies.

- Machine Learning Algorithms: Leveraging machine learning algorithms allows for the development of models that can automatically learn and improve from experience without being explicitly programmed. This advanced technique enables the extraction of valuable insights from web data, leading to more informed business decisions and strategic planning.
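The simplest predictive model is a straight-line trend fitted by ordinary least squares. The sketch below fits such a line to a series of illustrative daily counts (for example, how many product listings a scraper found each day) and extends it forward. The data and function names are assumptions for illustration; real forecasting usually also has to account for seasonality and noise.

```python
from typing import List, Sequence, Tuple


def fit_trend(values: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares line through (t, value) for t = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_t = (n - 1) / 2
    mean_v = sum(values) / n
    s_tt = sum((t - mean_t) ** 2 for t in range(n))
    s_tv = sum((t - mean_t) * (v - mean_v) for t, v in enumerate(values))
    slope = s_tv / s_tt
    intercept = mean_v - slope * mean_t
    return slope, intercept


def forecast(values: Sequence[float], horizon: int) -> List[float]:
    """Extend the fitted line `horizon` steps past the last observation."""
    slope, intercept = fit_trend(values)
    n = len(values)
    return [intercept + slope * (n + k) for k in range(horizon)]


daily_listings = [10, 12, 14, 16, 18]  # illustrative counts, not real data
print(fit_trend(daily_listings))    # (2.0, 10.0)
print(forecast(daily_listings, 2))  # [20.0, 22.0]
```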

Web Scraping Tools

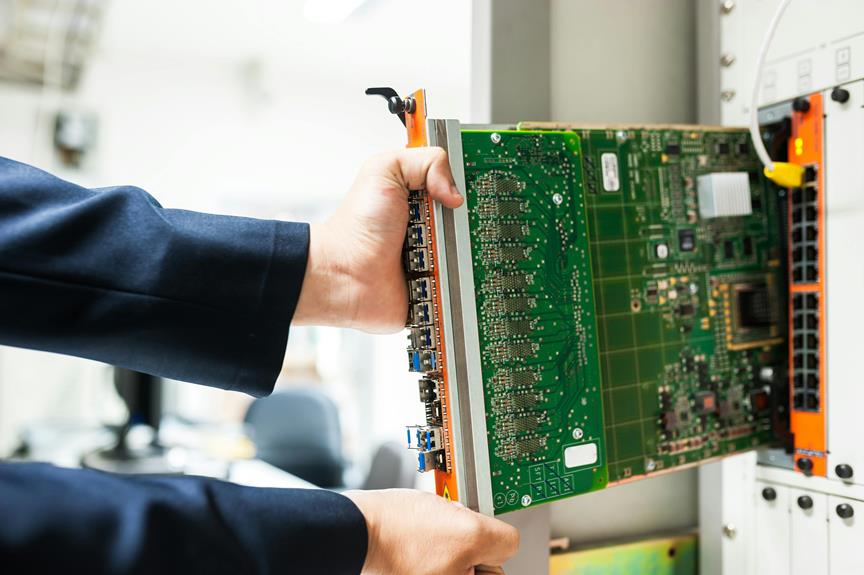

Harnessing the vast amount of web data available today requires the utilization of advanced tools and techniques to extract valuable insights. Data extraction automation plays a crucial role in efficiently collecting and organizing data from websites. Web scraping tools have seen significant advancements in recent years, allowing for more precise and comprehensive extraction of data from various sources on the internet.

These tools enable users to scrape data at scale, collecting information from multiple websites simultaneously. By automating the data extraction process, organizations can save time and resources while ensuring the accuracy and consistency of the extracted data. Web scraping advancements have also led to the development of tools that can handle dynamic websites, JavaScript-rendered content, and complex data structures, making it possible to extract data from a wider range of sources.
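At its core, a scraping tool turns HTML into structured fields. The sketch below uses Python's built-in `html.parser.HTMLParser` to collect the text of every element carrying a given CSS class. The sample markup, class names, and `extract` helper are hypothetical, and the parser assumes reasonably well-formed HTML; production tools typically use more tolerant parsers such as lxml or BeautifulSoup, and JavaScript-rendered pages additionally need a headless browser.

```python
from html.parser import HTMLParser

VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextExtractor(HTMLParser):
    """Collect the text of every element whose class list includes `target_class`."""

    def __init__(self, target_class: str) -> None:
        super().__init__()
        self.target_class = target_class
        self.items = []
        self._depth = 0    # > 0 while inside a matching element
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:  # void elements never get an end tag
            return
        if self._depth:
            self._depth += 1  # nested child of a matching element
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.target_class in classes:
            self._depth = 1
            self._buffer = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self._depth:
            return
        self._depth -= 1
        if self._depth == 0:
            # Normalize internal whitespace before storing.
            self.items.append(" ".join("".join(self._buffer).split()))

    def handle_data(self, data):
        if self._depth:
            self._buffer.append(data)


def extract(html: str, target_class: str) -> list:
    parser = ClassTextExtractor(target_class)
    parser.feed(html)
    parser.close()
    return parser.items


page = """
<ul>
  <li class="product"><span class="name">Desk Lamp</span> <span class="price">$24.00</span></li>
  <li class="product"><span class="name">Office Chair</span><br><span class="price">$149.00</span></li>
</ul>
"""
print(extract(page, "name"))   # ['Desk Lamp', 'Office Chair']
print(extract(page, "price"))  # ['$24.00', '$149.00']
```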

Incorporating these sophisticated web scraping tools into data analytics workflows can enhance decision-making processes and provide valuable insights for businesses across industries. As technology continues to evolve, the future of web data extraction will likely rely heavily on these innovative tools to unlock the full potential of web data.

IoT Data Extraction Advancements

How can IoT devices revolutionize the way we extract data from the web? Integrating IoT technology into data extraction processes presents a host of opportunities and challenges. Here’s a look at some key aspects:

- Enhanced Data Security Measures: IoT deployments can protect data with encrypted transmission and device authentication protocols, helping ensure that extracted data is transferred safely.

- Addressing Scalability Challenges: IoT devices offer the potential for seamless scalability in data extraction operations. By leveraging IoT sensors and devices, organizations can efficiently scale their data extraction processes to meet growing demands.

- Streamlining Automation Implementation: IoT devices can automate data extraction tasks by continuously gathering real-time data from various sources. This automation can improve efficiency, accuracy, and timeliness in extracting data from the web.
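Device authentication often comes down to proving that a message really came from a known device and was not altered in transit. A common building block is an HMAC over the payload with a per-device secret, which Python's standard `hmac` and `hashlib` modules support. This is a sketch under assumptions: the key, payload fields, and function names are hypothetical. HMAC provides integrity and authenticity but not confidentiality (that still requires encryption such as TLS), and this sketch does not defend against replayed messages, which would need a timestamp or nonce.

```python
import hashlib
import hmac
import json
from typing import Optional

DEVICE_KEY = b"per-device-secret"  # hypothetical; provision and store securely


def sign_reading(reading: dict, key: bytes = DEVICE_KEY) -> dict:
    """Serialize a reading canonically and attach an HMAC-SHA256 tag."""
    body = json.dumps(reading, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "sig": tag}


def verify_reading(message: dict, key: bytes = DEVICE_KEY) -> Optional[dict]:
    """Return the reading if the tag checks out, otherwise None."""
    expected = hmac.new(key, message["body"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, message["sig"]):  # constant-time comparison
        return None
    return json.loads(message["body"])


msg = sign_reading({"device": "sensor-01", "temp_c": 21.5})
print(verify_reading(msg))  # {'device': 'sensor-01', 'temp_c': 21.5}
tampered = dict(msg, body=msg["body"].replace("21.5", "99.9"))
print(verify_reading(tampered))  # None
```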

Data Privacy Regulations Impact

The regulatory landscape surrounding data privacy exerts a significant influence on web data extraction practices. Ethical considerations, data security, compliance challenges, and data protection are paramount in the context of data privacy regulations. Organizations engaging in web data extraction must navigate a complex environment where adherence to stringent guidelines is crucial.

Ethical considerations play a pivotal role in determining the appropriateness of data extraction practices. Ensuring that data is collected and used in a manner that respects user privacy and autonomy is essential. Data security is another critical aspect, as sensitive information gathered from the web must be safeguarded against unauthorized access or breaches.

Compliance challenges arise due to the evolving nature of data privacy regulations worldwide. Adhering to various frameworks such as GDPR, CCPA, or other regional laws requires a deep understanding of the legal landscape. Data protection measures must be implemented to secure extracted data and mitigate risks associated with non-compliance. As the regulatory environment continues to evolve, organizations must proactively address data privacy concerns to maintain trust and integrity in their web data extraction practices.
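One practical data protection measure is to minimize personal data before extracted records are stored: redact what you do not need and pseudonymize what you must keep for matching. The sketch below uses deliberately simple regular expressions and a keyed hash; the patterns, key, and function names are illustrative assumptions. The patterns only catch common formats, and pseudonymized data is generally still treated as personal data under GDPR, so this supports compliance rather than guaranteeing it.

```python
import hashlib
import hmac
import re

# Deliberately simple patterns: they catch common formats, not every valid one.
PII_RE = re.compile(
    r"(?P<email>[\w.+-]+@[\w-]+(?:\.[\w-]+)+)"
    r"|(?P<phone>\+?\d[\d\s().-]{7,}\d)"
)


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed hash: the same input maps to the same token without revealing it."""
    return hmac.new(key, value.lower().encode(), hashlib.sha256).hexdigest()[:12]


def redact(text: str, key: bytes) -> str:
    """Replace emails with stable pseudonyms and drop phone numbers entirely."""
    def replace(match):
        if match.group("email"):
            return f"<email:{pseudonymize(match.group('email'), key)}>"
        return "<phone>"
    # One pass with both patterns, so replacement tokens are never re-scanned.
    return PII_RE.sub(replace, text)


key = b"rotate-me"  # hypothetical key; keep it out of the stored dataset
print(redact("Contact jane.doe@example.com or +1 (555) 123-4567 today", key))
# The email becomes a 12-character hex token and the phone number becomes <phone>.
```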

Cloud-based Solutions for Extraction

Within the constraints set by data privacy regulations, organizations looking to streamline their web data extraction are turning to cloud-based solutions. These offer enhanced scalability, flexibility, and efficiency. Here are three key reasons why organizations are increasingly adopting them for their data extraction needs:

- Data Security Measures: Cloud providers invest heavily in security. Encryption, access controls, and regular security audits help keep data secure during extraction, although customers remain responsible for configuring these controls correctly.

- Cost-Effective Solutions: Cloud-based extraction solutions eliminate the need for organizations to invest in costly infrastructure and maintenance. With pay-as-you-go models, organizations can scale their extraction needs according to requirements, making it a cost-effective option.

- Ease of Implementation and Management: Cloud-based extraction solutions are typically easy to implement and manage. With no hardware to maintain and updates handled by the provider, organizations can focus on extracting valuable data instead of on upkeep.
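Whether pay-as-you-go is actually cheaper depends on volume: below some monthly request count it beats a fixed setup, and above it the fixed setup wins. The arithmetic is simple enough to sketch. All prices here are hypothetical placeholders, not quotes from any provider; substitute your own figures.

```python
def break_even_requests(fixed_monthly: float, price_per_1k: float) -> float:
    """Monthly request volume at which pay-as-you-go costs the same as a fixed setup."""
    return fixed_monthly / price_per_1k * 1000


def cheaper_option(monthly_requests: int, fixed_monthly: float, price_per_1k: float):
    """Return the cheaper model and the pay-as-you-go bill for that volume."""
    usage_cost = monthly_requests / 1000 * price_per_1k
    choice = "pay-as-you-go" if usage_cost < fixed_monthly else "fixed"
    return choice, usage_cost


# Hypothetical prices for illustration only.
FIXED_MONTHLY = 600.0  # self-managed servers, per month
PRICE_PER_1K = 0.50    # cloud extraction, per 1,000 requests

print(break_even_requests(FIXED_MONTHLY, PRICE_PER_1K))        # 1200000.0
print(cheaper_option(300_000, FIXED_MONTHLY, PRICE_PER_1K))    # ('pay-as-you-go', 150.0)
print(cheaper_option(2_000_000, FIXED_MONTHLY, PRICE_PER_1K))  # ('fixed', 1000.0)
```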

Frequently Asked Questions

How Can Businesses Ensure the Accuracy of Extracted Data?

To ensure accuracy, utilize data validation processes and machine learning algorithms. Regularly monitor and refine extraction methods. Implement quality checks and validation tools within your data extraction pipeline. Continuously train algorithms for improved results.
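A quality check inside the pipeline can be as simple as validating every record against a few rules and tracking the share that fail. The field names, price format, and helper names below are hypothetical; adapt them to your own schema. A sudden rise in the error rate can signal that a source site changed its layout and the extraction logic needs refining.

```python
import re
from typing import Dict, List

PRICE_RE = re.compile(r"^\$?\d+(?:\.\d{2})?$")  # e.g. "$24.00" or "149"
REQUIRED_FIELDS = ("url", "title", "price")


def validate(record: Dict[str, str]) -> List[str]:
    """Return a list of problems; an empty list means the record passed."""
    errors = [f"missing {f}" for f in REQUIRED_FIELDS if not record.get(f)]
    price = record.get("price")
    if price and not PRICE_RE.match(price):
        errors.append(f"malformed price: {price!r}")
    url = record.get("url")
    if url and not url.startswith(("http://", "https://")):
        errors.append(f"invalid url: {url!r}")
    return errors


def error_rate(records: List[Dict[str, str]]) -> float:
    """Share of records that fail validation."""
    if not records:
        return 0.0
    return sum(1 for r in records if validate(r)) / len(records)


good = {"url": "https://example.com/lamp", "title": "Desk Lamp", "price": "$24.00"}
bad = {"url": "ftp://example.com/x", "title": "", "price": "24,00"}
print(validate(bad))  # ['missing title', "malformed price: '24,00'", "invalid url: 'ftp://example.com/x'"]
print(error_rate([good, bad]))  # 0.5
```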

What Are the Potential Risks Associated With Real-Time Data Extraction?

Real-time data extraction carries real risks, chiefly data privacy breaches and cybersecurity vulnerabilities. Because data flows continuously, a single leak or misconfiguration can expose sensitive information quickly, so protecting it is crucial. Stay vigilant and implement robust security measures.

How Can Organizations Effectively Manage and Analyze Big Data From the Web?

To manage and analyze big data from the web effectively, you must prioritize data governance principles. Implement robust data analytics tools to extract valuable insights. Ensure compliance with regulations, maintain data quality, and optimize processes for informed decision-making.

What Security Measures Are Essential for IoT Data Extraction?

To secure IoT data extraction, implement robust data encryption to protect sensitive information from unauthorized access. Utilize access control mechanisms to restrict data retrieval only to authorized personnel, enhancing overall security of the extraction process.
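Access control of this kind is often modeled as role-based permissions with a deny-by-default rule. A minimal sketch, where the roles and permission strings are hypothetical examples:

```python
from typing import Dict, Iterable, Set

# Hypothetical roles and permissions for illustration.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "analyst": {"read:aggregates"},
    "engineer": {"read:aggregates", "read:raw"},
    "admin": {"read:aggregates", "read:raw", "export"},
}


def is_allowed(user_roles: Iterable[str], action: str) -> bool:
    """Deny by default; allow only if at least one role grants the action."""
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)


print(is_allowed(["analyst"], "read:raw"))              # False
print(is_allowed(["analyst", "engineer"], "read:raw"))  # True
print(is_allowed(["contractor"], "read:aggregates"))    # False (unknown role)
```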

How Do Cloud-Based Solutions Ensure Data Privacy Compliance During Extraction?

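Cloud-based solutions do not make privacy compliance automatic, but they provide tools that support it. Encryption in transit and at rest protects information from unauthorized access, while access controls, audit logs, and region-specific data storage help organizations meet requirements under regulations such as GDPR and CCPA. Responsibility is shared: the provider secures the underlying infrastructure, and your organization remains responsible for configuring it correctly and for having a lawful basis to collect the data in the first place.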